Questions about Russian President Vladimir Putin’s popularity are inextricable from discussions about Russia’s war on Ukraine. The Russian government has expended great effort selling the war to the Russian population, and there is speculation about the consequences of Putin’s shifting popularity for the survival of his regime. Indeed, recent polls show an increase in Putin’s apparent popularity. But the repressive nature of the Russian state raises questions about whether we can trust respondents in public opinion polls. Some respondents may fear that revealing their opposition to the president could lead to negative consequences and will therefore falsely state they support him. As a result, Putin’s actual level of support could be lower than responses to direct questions in public opinion polls suggest.

In 2015, we conducted analyses investigating this concern. Our study, which exploited a common technique to elicit sensitive opinions from survey respondents, suggested that Putin’s high approval ratings mostly reflected sincere support. In 2020–21, we investigated whether these results still held true. The recent analyses paint a more ambiguous portrait: there is considerably more uncertainty about Putin’s true support than was apparent in 2015. This uncertainty holds lessons for those who would use list experiments to measure the popularity of authoritarian leaders in Russia and elsewhere.

Putin’s Popularity in 2015

It is important to understand the method we use to estimate Putin’s popularity. The technique—widely employed to study sensitive attitudes or behavior—is known as an “item count” or “list” experiment. In our context, the experiment works as follows.

We randomly divide survey respondents in a nationally representative sample of the Russian population into two groups. The first, “control,” group is presented with a list of three politicians. The second, “treatment,” group receives the same list of three figures, plus Putin. Respondents in each group are asked how many—but not which—politicians they support. As respondents only tell the survey enumerator how many politicians they support, it is generally impossible to determine whether any particular respondent supports Putin. In the aggregate, however, support for Putin can be estimated as the difference between the mean response for respondents in the treatment and control groups, respectively. Random assignment ensures that any such difference is attributable only to the presence or absence of Putin on the list, not to characteristics of the respondents themselves.
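The difference-in-means logic described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in our study: the function name `list_experiment_estimate`, the toy responses, and the normal-approximation confidence interval are all assumptions made for the example.

```python
import math
from statistics import mean, variance


def list_experiment_estimate(control_counts, treatment_counts, z=1.96):
    """Estimate support for the extra list item as a difference in means.

    Each argument is a sequence of item counts reported by respondents.
    Returns the point estimate and an approximate 95 percent interval
    (normal approximation; an assumption of this sketch).
    """
    estimate = mean(treatment_counts) - mean(control_counts)
    # Standard error of a difference between two independent sample means.
    se = math.sqrt(
        variance(treatment_counts) / len(treatment_counts)
        + variance(control_counts) / len(control_counts)
    )
    return estimate, (estimate - z * se, estimate + z * se)


# Hypothetical responses for illustration only (not survey data).
control = [0, 1, 1, 2, 1, 3, 0, 1]      # list of three politicians
treatment = [1, 2, 2, 3, 2, 4, 1, 2]    # same list plus the sensitive item

estimate, ci = list_experiment_estimate(control, treatment)

# The same arithmetic applied to the group means reported for January 2015.
january_2015 = round(1.92 - 1.11, 2)
```

With real data, the two sequences would hold the counts reported by the randomly assigned control and treatment groups; the estimate is the share of respondents who support the additional figure.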

For example, we used the following list experiment to estimate support for Putin in both 2015 and 2020–21: “Take a look at this list of politicians and tell me for how many you generally support their activities. Vladimir Zhirinovsky; Gennady Zyuganov; Sergei Mironov; [Vladimir Putin].”[1]

All of the figures on the list were relatively prominent contemporary Russian politicians at the time of our surveys: Zhirinovsky and Zyuganov led the ersatz opposition Liberal Democratic Party and Communist Party, respectively, whereas Mironov led A Just Russia, a party that is also part of the “systemic opposition.”

In January 2015, respondents in the control group (without Putin) reported supporting 1.11 of the listed politicians on average. Respondents in the treatment group (with Putin) reported supporting 1.92 politicians on average. The difference between the two means (1.92-1.11=0.81) implies that 81 percent of survey respondents supported Putin, some five percentage points less than implied by the direct question—a difference that is not statistically significant. The list experiment thus provides little evidence that respondents were “falsifying” their preferences (not disclosing their privately held attitudes) due to fear of expressing opposition to Putin.[2]

In addition to this list of contemporary politicians, we also designed an experiment in which the control items were historical Russian or Soviet leaders: Joseph Stalin, Leonid Brezhnev, and Boris Yeltsin. We ran experiments with both sets of lists in January and March 2015, with approximately 1,600 respondents in each case. Table 1 reports the results from these experiments. After accounting for uncertainty, the estimates are remarkably similar—to each other and to the direct estimates—suggesting that support for Putin was largely genuine.

Table 1: Estimated Support for Putin in 2015 from Direct Questions and List Experiments

| | Direct | Contemporary list | Historical list |
|---|---|---|---|
| January | 86% (85%, 88%) | 81% (70%, 91%) | 79% (69%, 89%) |
| March | 88% (86%, 90%) | 80% (69%, 90%) | 79% (70%, 88%) |

In our original study, we conducted various auxiliary analyses to check for design effects. Although the differences between the estimates from our list experiments and direct questions are relatively small and statistically insignificant, they still hint at the possibility of “artificial deflation,” a generic term for a tendency to undercount list items that grows stronger as the list gets longer. To check for this possibility, we conducted a list experiment with world figures: Belarusian President Aliaksandr Lukashenka, German Chancellor Angela Merkel, former South African President Nelson Mandela, and (in the treatment condition) former Cuban leader Fidel Castro. The premise of this “placebo” experiment was that support for Castro is not sensitive in the Russian context. If true, any difference between the list and direct estimates of support for Castro would be evidence of artificial deflation rather than preference falsification. The result of our placebo experiment, in which estimated support for Castro was nine percentage points lower than implied by the direct question (60 percent), is thus evidence of artificial deflation in our list estimates.

In summary, our original list experiments in 2015 suggested that Putin’s popularity was largely real, with those small differences between the list and direct estimates more likely attributable to design effects than to preference falsification.

Putin’s Popularity in 2020–21

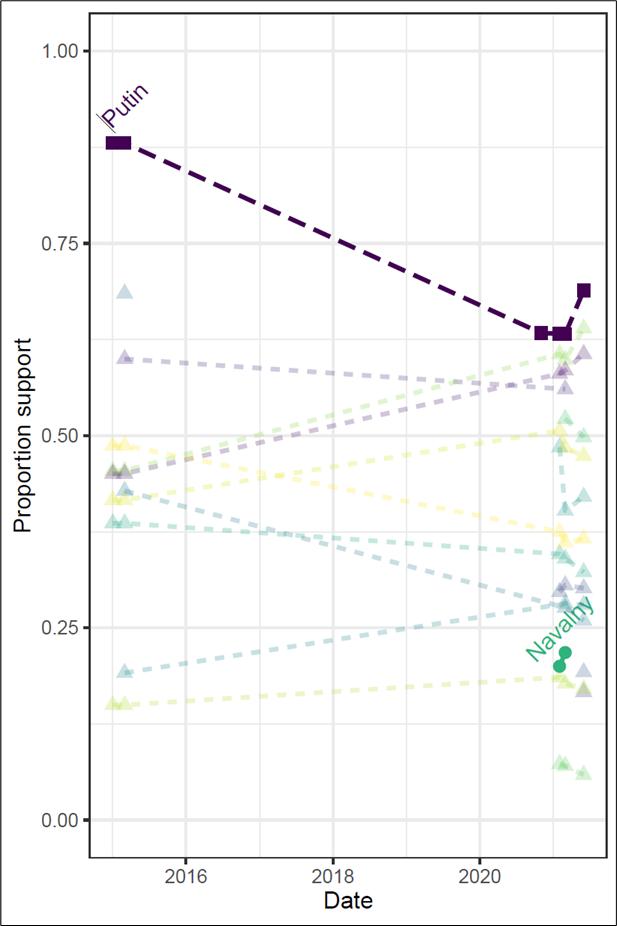

In late 2020 and early 2021, we revisited our analysis of Putin’s popularity. The Russian president’s apparent popularity had dropped dramatically from 2015 to 2020. As Figure 1 illustrates, this drop is unique among the various political figures for whom we have data in both periods.[3] Moreover, many aspects of Russian politics changed during this period. Perhaps most prominently, opposition activist Alexei Navalny was poisoned by Russian security services and left the country for treatment in August 2020. When Navalny returned to Russia in January 2021, he was promptly arrested, resulting in mass protests and the detention of many demonstrators. As the repressive nature of the Russian state became more apparent, the perceived risks of voicing opposition may have grown as well.

Figure 1: Change in Direct Estimates of Politicians’ Popularity between 2015 and 2020–21

As in 2015, we contracted with the highly regarded Levada Center to conduct list experiments on a nationally representative sample of the Russian population (in November 2020, and then again in February, March, and June 2021). To estimate support for Putin, we used the contemporary and historical lists described above. In one wave of the survey, we additionally employed a modified version of the list used to estimate support for Castro in 2015 (the “international” list), in which we replaced Lukashenka as a control item with the first president of Kazakhstan, Nursultan Nazarbayev.

Table 2 reports the results from our list experiments in 2020 and 2021; as before, there were approximately 1,600 respondents in each wave. Across survey waves, the list experiments suggest support for Putin 9 to 23 percentage points lower than implied by the direct questions, a generally greater difference than for any of the lists in 2015. If artificial deflation is of the magnitude inferred in 2015 (around five to nine percentage points), this suggests that true support for Putin fell even more over the preceding period than the decline in approval ratings from direct questioning suggests. Indeed, under this assumption, Putin’s true support could lie below 50 percent.

Table 2: Estimated Support for Putin in 2020–2021 from Direct Questions and List Experiments

| | Direct | Contemporary list | Historical list | International list |
|---|---|---|---|---|
| November 2020 | 63% (61%, 66%) | 54% (44%, 64%) | 50% (41%, 58%) | |
| February 2021 | 63% (61%, 66%) | | | 40% (31%, 49%) |
| March 2021 | 63% (61%, 66%) | 40% (30%, 50%) | 44% (36%, 53%) | |
| June 2021 | 69% (67%, 71%) | 46% (36%, 56%) | 48% (38%, 57%) | |
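To make the "below 50 percent" reasoning concrete, the following sketch adds the deflation inferred in 2015 (five to nine percentage points) to each list estimate in Table 2. It is an illustration only: it uses point estimates, ignores sampling uncertainty, and assumes that deflation simply adds to the list estimate. The names `adjusted_range` and `below_50` are invented for the example.

```python
# List estimates of support for Putin (percent), copied from Table 2.
list_estimates = {
    "November 2020": [54, 50],
    "February 2021": [40],
    "March 2021": [40, 44],
    "June 2021": [46, 48],
}

# Assumed artificial deflation, in percentage points, as inferred in 2015.
DEFLATION_LOW, DEFLATION_HIGH = 5, 9


def adjusted_range(list_estimate, low=DEFLATION_LOW, high=DEFLATION_HIGH):
    """Return the (low, high) range of support after adding back deflation."""
    return list_estimate + low, list_estimate + high


adjusted = {
    wave: [adjusted_range(estimate) for estimate in estimates]
    for wave, estimates in list_estimates.items()
}

# Waves in which at least one adjusted range lies entirely below 50 percent.
below_50 = {
    wave
    for wave, ranges in adjusted.items()
    if any(high < 50 for _, high in ranges)
}
```

Under these assumptions, a list estimate of 40 percent maps to an adjusted range of 45 to 49 percent, which is the sense in which true support could lie below 50 percent.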

We cannot, however, assess these results in isolation. As in 2015, we compare these results to a series of placebo experiments to check for design issues. In March, we repeated the Castro experiment from 2015, with the modification to the “international” list described above. In addition, in June, we conducted list experiments to estimate support for two other political figures we understand to be comparatively non-sensitive: Soviet leader Leonid Brezhnev and Communist Party presidential candidate and entrepreneur Pavel Grudinin.[4] As before, the idea is to use non-sensitive figures to determine whether the reduced support for Putin that we observe in the list experiment is a consequence of preference falsification or some design effect.

Table 3 reports results from these analyses. Across all three placebo experiments, the difference between the list and direct estimates is greater than for the Castro experiment in 2015—in two cases (Castro and Brezhnev), dramatically so. As discussed, we have no strong reason to believe that support for any of the three treatment figures—Castro, Brezhnev, and Grudinin—would be politically sensitive. If anything, support for Grudinin might work in the other direction, with respondents hesitant to express support for a quasi-opposition figure, implying estimates from the list experiment higher than from the direct question. We therefore conclude that there is substantial evidence of artificial deflation in the list experiments from 2020–21.

Table 3: Estimated Support for Placebo Figures in 2021 from Direct Questions and List Experiments

| | Castro (March) | Brezhnev (June) | Grudinin (June) |
|---|---|---|---|
| Direct estimate | 56% (54%, 59%) | 61% (58%, 63%) | 30% (28%, 33%) |
| List estimate | 34% (25%, 44%) | 39% (30%, 47%) | 18% (10%, 26%) |
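The placebo comparison can be reproduced with simple subtraction. The sketch below computes the gap between direct and list point estimates for each placebo figure in Table 3 and compares it with the nine-point gap for Castro in 2015. It is a rough check on point estimates only; the differences and intervals in the Appendix come from a model-based calculation and can differ by a point. The variable names are illustrative.

```python
# (direct estimate, list estimate) in percent, copied from Table 3.
placebos_2021 = {
    "Castro (March)": (56, 34),
    "Brezhnev (June)": (61, 39),
    "Grudinin (June)": (30, 18),
}

# Gap for the Castro placebo in 2015, in percentage points.
CASTRO_GAP_2015 = 9

# Direct minus list: a positive gap means the list estimate is lower.
gaps = {
    name: direct - listed
    for name, (direct, listed) in placebos_2021.items()
}

# Placebo figures whose 2021 gap exceeds the 2015 benchmark.
larger_than_2015 = [
    name for name, gap in gaps.items() if gap > CASTRO_GAP_2015
]
```

Because support for these figures is presumed non-sensitive, gaps of this size point to a design effect rather than to preference falsification.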

As a final wrinkle in these analyses, we also ran list experiments to estimate the popularity of opposition figure Alexei Navalny. In contrast to the placebo figures, it is very plausible that support for Navalny is sensitive: our study was conducted just after his return to the country and arrest in January 2021. Thus, as with Grudinin, but much more strongly, we might expect the lists to reveal higher support for Navalny than the direct questions do.

Table 4 presents results from the Navalny experiments. We use two lists to estimate his support: the contemporary list we used for Putin and a “society” list that includes conservative filmmaker Nikita Mikhalkov, socialite and opposition figure Ksenia Sobchak, and Grudinin. We repeated the latter experiment a month later. In each case, the list estimates are close to those from the direct question. Indeed, in two of the three experiments, the point estimates from the list experiments are marginally higher than from the direct questions, in line with our prior belief that the sensitivity of support for Navalny would lead to underreporting of that attitude when asked directly. If we assume that the list estimates artificially deflate actual support for Navalny, as may be the case with Putin and the placebo figures, then his true popularity could be higher yet.

Table 4: Estimated Support for Navalny in 2021 from Direct Questions and List Experiments

| | Direct | Contemporary list | Society list |
|---|---|---|---|
| February | 20% (18%, 22%) | 21% (12%, 31%) | 15% (8%, 23%) |
| March | 22% (20%, 24%) | | 23% (15%, 30%) |

Interpretations

There are three primary interpretations of the results of our various experiments from 2015 and 2020–21:

- In both 2015 and 2020–21, the list experiments roughly capture the actual population-level support for different politicians. If true, Putin’s support dropped from 2015 to 2020–21 to a far greater degree than implied by direct questioning. Although plausible when viewed in isolation, a further implication of this interpretation is that, in 2020–21, there was substantial preference falsification for various political figures for whom we had little a priori reason to suspect sensitivity. Moreover, this interpretation would lead us to believe that expressing support for Navalny in 2021 was not sensitive, given the similarity of list and direct estimates of his support. Together, these implications cast doubt on this interpretation.

- There is substantial artificial deflation in our list experiments in 2020–21, and this deflation is of considerably larger magnitude than in 2015. If true, Putin could be roughly as popular as direct questioning suggests in both periods, whereas Navalny (with roughly similar direct and list estimates of support) might be much more popular. This view is supported by the large apparent artificial deflation in estimated support for various figures we presume were non-sensitive in 2020–21. This interpretation is plausible, though we do not fully understand its root causes if true.

- Artificial deflation in the list experiments differs from one politician to the next or follows some unknown process related to the politician’s underlying popularity. This interpretation is also plausible, though if true implies that we do not have sufficient information to estimate the popularity of any politician with the list experiments we discuss here.

Other Context and Recommendations

Our earlier work has been much referenced as evidence of the reliability of public opinion polling on Putin’s popularity. We remain broadly confident of our conclusions from 2015, but our recent experience suggests caution about the use of list experiments more generally to measure the popularity of political figures, and perhaps other political attitudes and behavior. As we anticipate that other scholars of Russia will gravitate to such designs in response to the increasing criminalization of dissent and associated concerns about preference falsification, we provide here some context and recommendations.

First, although we used the same survey firm (Levada Center) in both 2015 and 2020–21, there was a potentially consequential change in survey mode, from pen/paper to computer-assisted personal interview (i.e., tablet). We do not know why this would matter, but it could have.[5] In addition, in 2015, we did our own randomization, whereas, in 2020–21, Levada did the randomization. We see no evidence that Levada’s randomization failed, but this is again a difference in implementation.

We additionally provide two recommendations to scholars who are considering similar research designs.

- The use of placebo experiments should be standard practice for list experiments, especially those intended to gauge the popularity of political figures. Absent supportive evidence that artificial deflation is not biasing list estimates, scholars should not assume that any difference between direct and list estimates represents preference falsification.

- In our original study, we also used direct questions about control items to explore the presence of floor and ceiling effects. As with placebo experiments, the inclusion of such direct questions should be standard practice in list designs.

When used with the diagnostics that Graeme Blair and Kosuke Imai discuss, such practices can minimize the risk of drawing unwarranted conclusions from list experiments. Indeed, had we not followed these practices ourselves, we might have made very strong—and potentially very wrong—claims about the extent of preference falsification and level of support for Putin in 2020–21.
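As a rough illustration of how direct questions about control items can flag floor and ceiling problems, the sketch below computes the share of respondents who say they support none, or all, of the control figures. It is a hypothetical check, not the procedure from our original study or the Blair and Imai diagnostics; the function name and the toy answers are invented for the example.

```python
def floor_ceiling_shares(direct_answers):
    """Share of respondents supporting none (floor) or all (ceiling) controls.

    direct_answers holds one tuple of booleans per respondent, with one
    entry per control item (True = supports that figure when asked directly).
    """
    n = len(direct_answers)
    floor_share = sum(1 for answers in direct_answers if not any(answers)) / n
    ceiling_share = sum(1 for answers in direct_answers if all(answers)) / n
    return floor_share, ceiling_share


# Hypothetical direct answers for three control items (not survey data).
answers = [
    (True, False, False),
    (False, False, False),   # supports no control figure: floor
    (True, True, True),      # supports every control figure: ceiling
    (False, True, False),
]

floor_share, ceiling_share = floor_ceiling_shares(answers)
```

Respondents at either extreme cannot hide their view of the sensitive item behind the count, so large shares at the floor or ceiling suggest that the control items offer too little cover.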

[1] The question wording mirrors the analogous direct question about Putin’s and others’ support.

[2] In the Appendix, we provide confidence intervals for the difference between direct and list estimates of support. Under the assumption of no design effects, this difference represents preference falsification.

[3] Besides Putin, these figures are those whom we included in the various list experiments described previously in the text: Brezhnev, Castro, Mandela, Merkel, Mironov, Stalin, Yeltsin, Zhirinovsky, and Zyuganov.

[4] The Brezhnev experiment used a modified version of the historical list, replacing Brezhnev (now the “sensitive” item) with the final Soviet leader, Mikhail Gorbachev. The Grudinin experiment used a design similar to that we describe below for the Navalny “society” experiment, with control figures Alexei Kudrin (a regime-affiliated liberal economist), Nikita Mikhalkov, and Ksenia Sobchak.

[5] A related project found evidence of artificial inflation (as opposed to deflation) of the sensitive item (Putin) in a list experiment similar to those described above, but with online samples. Although this runs counter to what we observe with the CAPI surveys here, it highlights the potential importance of survey mode and the importance of diagnostics (in this case, using direct questions about control items).

Appendix

Shown is the estimated difference between direct and list estimates of support for Russian political figures, as calculated using the predict function from the R package list. Under the assumption of no design effects, this difference represents preference falsification. In each table, values in parentheses represent 95 percent confidence intervals, whereas bold denotes differences for which these intervals do not include zero.

Appendix Table 1: Estimated Difference between Direct and List Estimates of Support for Putin

| | Contemporary list | Historical list | International list |
|---|---|---|---|
| January 2015 | -6% (-16%, 5%) | -7% (-17%, 3%) | |
| March 2015 | -8% (-19%, 2%) | -9% (-18%, 0%) | |
| November 2020 | -9% (-19%, 1%) | -14% (-23%, -5%) | |
| February 2021 | | | -23% (-32%, -14%) |
| March 2021 | -24% (-34%, -13%) | -19% (-28%, -10%) | |
| June 2021 | -23% (-33%, -13%) | -21% (-31%, -12%) | |

Appendix Table 2: Estimated Difference between Direct and List Estimates of Support for Navalny

| | Contemporary list | Society list |
|---|---|---|
| February 2021 | 1% (-8%, 11%) | -5% (-12%, 3%) |
| March 2021 | | 1% (-7%, 8%) |

Appendix Table 3: Estimated Difference between Direct and List Estimates of Support for Placebo Figures

| | Castro | Brezhnev | Grudinin |
|---|---|---|---|
| March 2015 | -9% (-19%, 2%) | | |
| March 2021 | -22% (-32%, -12%) | | |
| June 2021 | | -22% (-31%, -13%) | -12% (-20%, -4%) |

Timothy Frye is Marshall D. Shulman Professor of Post-Soviet Foreign Policy in the Department of Political Science at Columbia University.

Scott Gehlbach is Professor in the Department of Political Science and Harris School of Public Policy at the University of Chicago.

Kyle L. Marquardt is Associate Professor in the Department of Comparative Politics at the University of Bergen.

Ora John Reuter is Associate Professor in the Department of Political Science at the University of Wisconsin–Milwaukee.